Mastering Go Concurrency: The Two Production Patterns You Need to Know


Welcome! Go (Golang) is renowned for building highly efficient backend systems, largely thanks to its simplicity and its powerful concurrency model. However, this power comes with responsibility: if concurrency is not used correctly, your system's performance can actually degrade.

I'll guide you through two essential Go concurrency patterns, Fan Out/Fan In and the Worker Pool, which show up in many production Go systems. By the end of this post, you will be able to apply these patterns directly to build efficient backends.

Why We Need Concurrency: The Problem with Sequential Tasks

Imagine you are building an application that handles heavy tasks, such as image processing (resizing, cropping, or applying filters).

In a typical synchronous execution flow, code runs sequentially in a single thread of execution. If you have three images to process, the system handles them one after the other:

- Process Image 1 (takes 50 milliseconds)

- Wait for Image 1 to finish

- Process Image 2 (takes 50 milliseconds)

- Wait for Image 2 to finish

- Process Image 3 (takes 50 milliseconds)

In this synchronous setup, three images taking 50 ms each take approximately 150 milliseconds in total. That is acceptable for a single task, but the total time grows linearly with the number of tasks, so sequential execution quickly slows your application down.

Go Routines and the Concurrency Model

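To remove the sequential bottleneck, Go lets us run tasks concurrently, meaning their execution overlaps in time: they may run in parallel on multiple CPU cores, or be interleaved on a single core so that they appear to run at the same time.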

Go Routines: The Lightweight Threads

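In Go, we achieve concurrency using Go Routines. These are not operating-system threads; they are lightweight units of execution managed by the Go runtime, which schedules many Go Routines onto a small number of OS threads. Each one starts with a small stack of a few kilobytes that grows as needed, so a program can comfortably run thousands of them.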

To launch a function as a Go Routine, prefix the function call with the `go` keyword:

```go
go worker("image1.png")
```

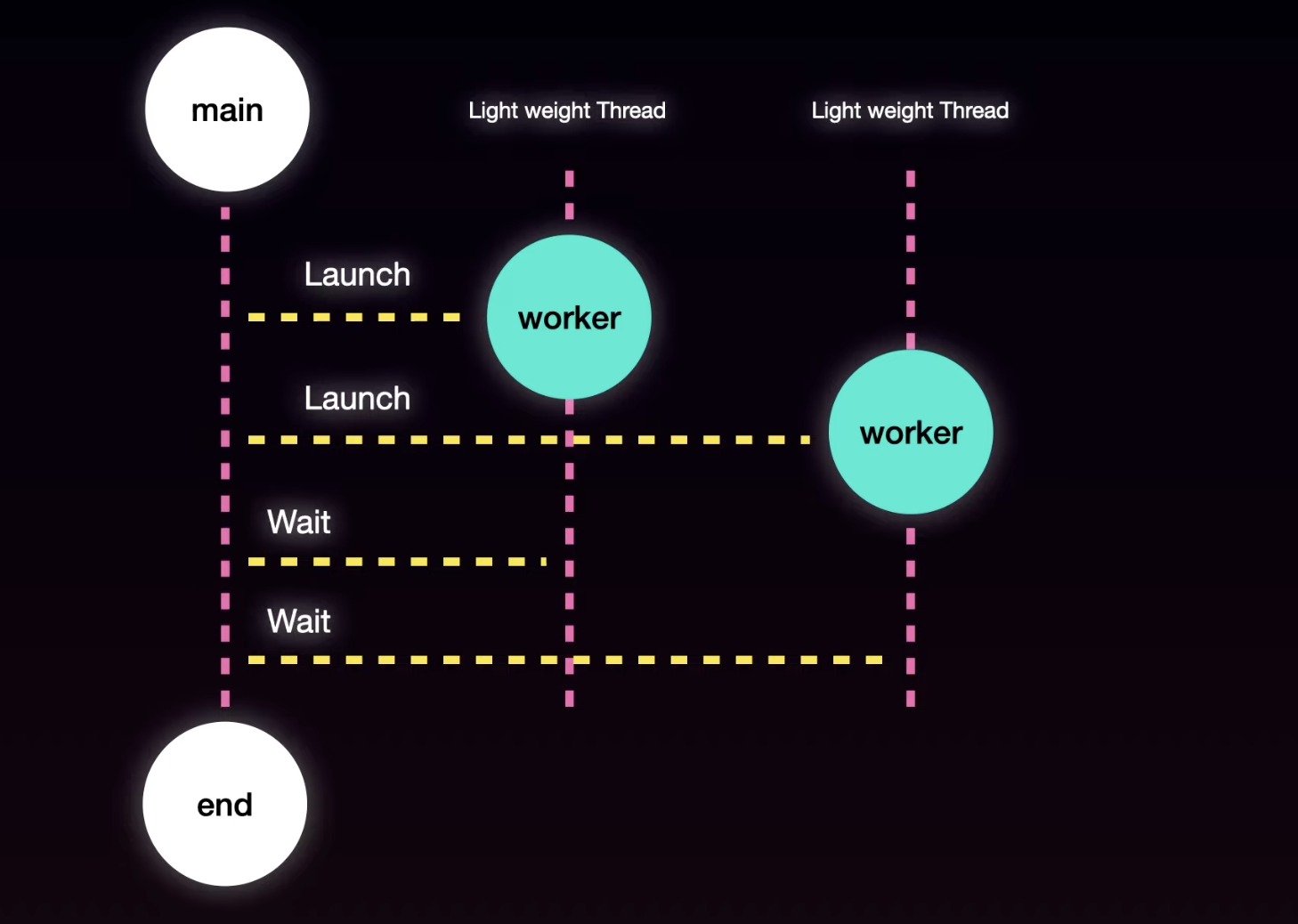

The "Launch and Wait" Principle

The Go concurrency model can be summarized as Launch and Wait.

- Launch: The `main` function runs on the Main Go Routine. When execution reaches a `go` statement, the runtime starts that function in a new, separate Go Routine.

- Asynchronous Execution: The key insight is that the Main Go Routine does not stop and wait for the worker to finish. The `go` statement returns immediately, and `main` continues executing the rest of its code while the worker runs concurrently.

The Pitfall: When `main` returns, the program exits immediately, and any Go Routines that are still running are stopped without finishing. Their work is never completed, and anything they would have logged is never printed.

The Solution: Synchronization with Wait Groups

To overcome the termination problem, we must ensure the Main Go Routine waits for all other launched workers to finish. This is achieved through Wait Groups (sync.WaitGroup).

A Wait Group functions like a counter:

- Add: Before launching workers, call `wg.Add(n)` to increase the counter by the number of workers you are about to start (the example below calls `wg.Add(1)` once per worker). Call it in the launching code, before the `go` statement, not inside the worker; otherwise `Wait` may run before the counter has been incremented.

- Wait: In the main function, call `wg.Wait()`. This blocks the Main Go Routine until the counter returns to zero.

- Done: Inside the worker function, once its job is complete, call `wg.Done()`, which decrements the Wait Group counter by one.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

func worker(id int, wg *sync.WaitGroup) {
	defer wg.Done() // Decrement the counter when worker returns, on any return path

	fmt.Printf("Worker %d starting\n", id)
	time.Sleep(50 * time.Millisecond) // Simulate work
	fmt.Printf("Worker %d done\n", id)
}

func main() {
	var wg sync.WaitGroup

	for i := 1; i <= 3; i++ {
		wg.Add(1) // Increment counter before launching the worker
		go worker(i, &wg)
	}

	wg.Wait() // Block until all workers call Done()
	fmt.Println("All workers completed")
}
```

Best Practice Tip: Always call `wg.Done()` with `defer`. The deferred call runs as a cleanup step whenever the worker returns, including early returns on an error path, so the counter is always decremented and the main function never hangs in `wg.Wait()`.

Pattern 1: Fan Out and Fan In

This pattern is fundamental for distributing work and then aggregating the results.

Fan Out: Distributing the Workload

Fan Out is the process of distributing a task across multiple Go Routines concurrently.

Using Wait Groups, we can safely launch many workers (e.g., 5 workers processing 5 different images). Because the tasks run concurrently, the total time is roughly the duration of the longest single task rather than the sum of all tasks. For example, five simulated 50 ms jobs that just sleep finish in slightly more than 50 ms in total instead of 250 ms. For CPU-bound work such as real image processing, the speedup is limited by the number of available CPU cores.

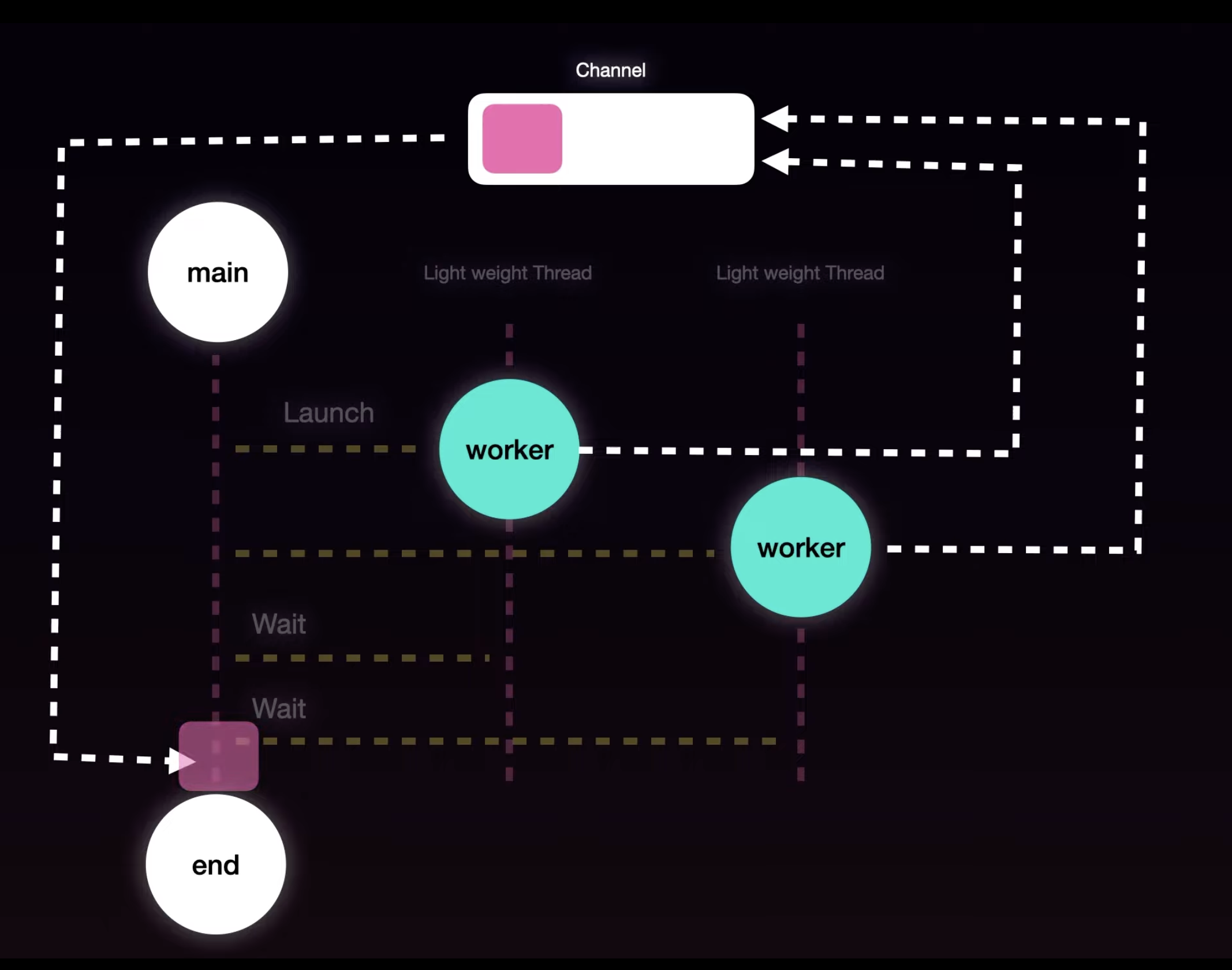

Fan In: Aggregating the Results via Channels

In a real application, workers usually need to return data (like the final image URL or processing status) back to the Main Go Routine. This aggregation is called Fan In, and it is accomplished using Channels.

Understanding Channels

Channels are a built-in Go feature for communication between Go Routines. Think of a channel as a typed, concurrency-safe First-In, First-Out (FIFO) queue.

- Sender: A worker pushes data into the channel (the queue)

- Queue: The data sits in the channel waiting to be processed

- Receiver: The Main Go Routine reads the data from the channel

Channels let Go Routines pass data to each other safely without explicit locks, because the channel synchronizes the sender and receiver internally.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

type Result struct {
	Value string
	Error error
}

func processImage(id int, results chan<- Result, wg *sync.WaitGroup) {
	defer wg.Done()

	// Simulate image processing
	time.Sleep(50 * time.Millisecond)

	// Send result to channel
	results <- Result{
		Value: fmt.Sprintf("Image %d processed", id),
		Error: nil,
	}
}

func main() {
	var wg sync.WaitGroup
	results := make(chan Result, 5)

	// Fan Out: Launch workers
	for i := 1; i <= 5; i++ {
		wg.Add(1)
		go processImage(i, results, &wg)
	}

	// Close channel after all workers finish
	go func() {
		wg.Wait()
		close(results)
	}()

	// Fan In: Collect results
	for result := range results {
		if result.Error != nil {
			fmt.Printf("Error: %v\n", result.Error)
		} else {
			fmt.Println(result.Value)
		}
	}
}
```

Channel Types and Deadlocks

When creating a channel using make(chan type, size):

- Buffered Channel: You specify a capacity (e.g., 5). Up to that many values are stored in the channel; a sender blocks only when the buffer is full, and a receiver blocks only when it is empty.

- Unbuffered Channel: No size is defined. This acts like a direct connection; both the sender and receiver must be ready at the same time for the data transfer to occur, as the data is not stored.

Crucial Step: Closing Channels

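If you read from a channel with a `for range` loop, the loop keeps waiting for more values until the channel is closed. If all workers have finished sending but the Main Go Routine is still waiting in that loop, and no other Go Routine can make progress, the Go runtime detects this and crashes with `fatal error: all goroutines are asleep - deadlock!`. (If other Go Routines are still running, such as an HTTP server, there is no crash; the loop simply hangs forever.) Therefore, the sending side must call `close` on the channel once all data has been sent. In the Fan In example, a separate Go Routine calls `close(results)` after `wg.Wait()` returns.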

Handling Complex Data

While simple strings can be sent over channels, production systems usually require more information (like status, the processed value, and potential errors).

We typically define a struct for a complex result type (e.g., a `Result` struct with `Value` and `Error` fields). Go has no exceptions; idiomatic Go returns errors as ordinary values. By including an error field in the struct, the receiving routine can check whether the error is non-nil and handle the failure (e.g., by reprocessing the job or sending it to a Dead Letter Queue, or DLQ).

Note on Order: When running Go Routines concurrently, the Go scheduler does not guarantee the order of execution. Results processed by workers may arrive in the channel out of sequence.

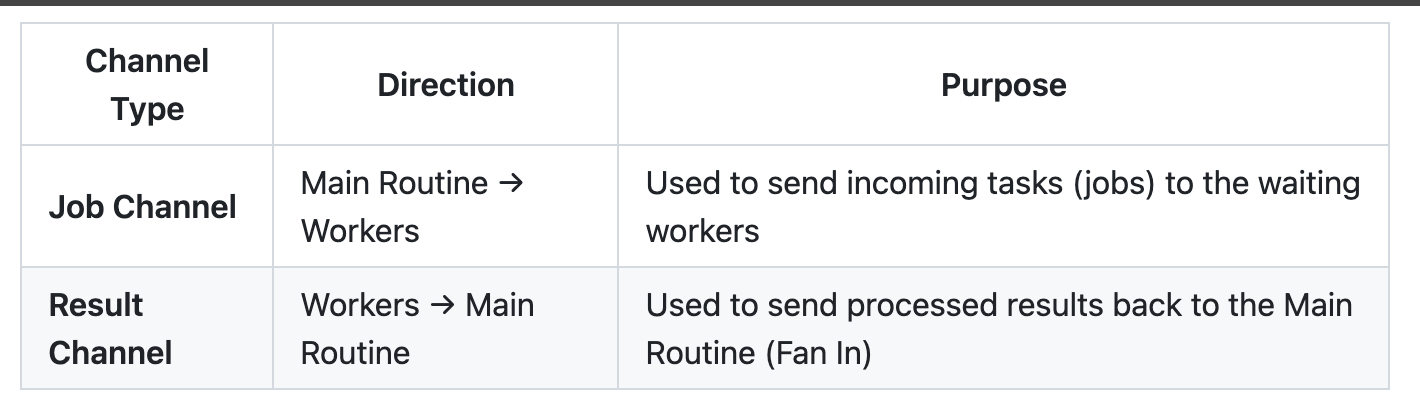

Pattern 2: The Worker Pool

The Fan Out pattern works well for a fixed, manageable set of jobs. But what if you have thousands or even millions of jobs (e.g., a slice of 1000 images fetched from a database)?

The Scalability Problem

If you use a simple for loop combined with Fan Out to process 1,000 jobs, you will spin up 1,000 Go Routines.

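Launching an unbounded number of Go Routines is risky. Each Go Routine is cheap, but the work it does is not: a thousand simultaneous image jobs can exhaust memory, contend for a limited number of CPU cores, open too many files or database connections, or overwhelm a downstream API. Without a limit on the number of workers, load grows with the size of the input and your system's performance degrades.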

The Solution: Limiting Workers

The Worker Pool pattern solves this by fixing the number of workers (Go Routines) available, regardless of the total number of jobs. The workers sit ready, waiting for tasks to be fed to them.

For example, if you have 20 jobs, you might define only five total workers.

How the Worker Pool Works

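A Worker Pool relies on two channels:

- Job Channel: carries the jobs waiting to be processed from the Main Go Routine to the workers.

- Results Channel: carries each worker's results back to the Main Go Routine.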

The Workflow:

- Warm Up Workers: A fixed number of workers (e.g., 5) are started in a loop. Each worker immediately starts listening for tasks on the Job Channel with a `for range` loop. While the channel is open but empty, the worker simply blocks in the background, waiting for the next job.

- Send Jobs: The Main Go Routine iterates over the list of jobs (e.g., 20 jobs) and sends them one by one onto the Job Channel. The Job Channel acts as a buffer (or queue) where jobs wait for an available worker.

- Processing: Whenever a worker finishes a task, it picks up the next job waiting in the Job Channel and begins processing it.

- Shutdown: Once the Main Go Routine has sent all 20 jobs, it closes the Job Channel. Each worker's `for range` loop ends after the remaining jobs are drained, and the workers exit gracefully.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

type Job struct {
	ID    int
	Value string
}

type Result struct {
	JobID int
	Value string
	Error error
}

func worker(id int, jobs <-chan Job, results chan<- Result, wg *sync.WaitGroup) {
	defer wg.Done()

	for job := range jobs {
		fmt.Printf("Worker %d processing job %d\n", id, job.ID)

		// Simulate processing
		time.Sleep(50 * time.Millisecond)

		// Send result
		results <- Result{
			JobID: job.ID,
			Value: fmt.Sprintf("Processed: %s", job.Value),
			Error: nil,
		}
	}

	fmt.Printf("Worker %d finished\n", id)
}

func main() {
	const numWorkers = 5
	const numJobs = 20

	jobs := make(chan Job, numJobs)
	results := make(chan Result, numJobs)

	var wg sync.WaitGroup

	// Start worker pool
	for w := 1; w <= numWorkers; w++ {
		wg.Add(1)
		go worker(w, jobs, results, &wg)
	}

	// Send jobs
	for j := 1; j <= numJobs; j++ {
		jobs <- Job{
			ID:    j,
			Value: fmt.Sprintf("image%d.png", j),
		}
	}
	close(jobs) // No more jobs

	// Close results channel after all workers finish
	go func() {
		wg.Wait()
		close(results)
	}()

	// Collect results
	for result := range results {
		if result.Error != nil {
			fmt.Printf("Job %d failed: %v\n", result.JobID, result.Error)
		} else {
			fmt.Printf("Job %d completed: %s\n", result.JobID, result.Value)
		}
	}

	fmt.Println("All jobs completed")
}
```

This keeps the number of concurrently running Go Routines bounded no matter how many jobs there are. For instance, 20 jobs of about 50 ms each shared among five workers run in roughly 20 / 5 = 4 rounds, or about 4 × 50 ms = 200 ms in total, while never more than five jobs run at once.

Your Go Concurrency Toolkit

By mastering the two patterns—Fan Out/Fan In and Worker Pool—you possess the fundamental knowledge needed to build high-performance systems in Go.

Key Takeaways

- Concurrency vs. Sequential: Use Go Routines (lightweight threads) to run code concurrently and avoid sequential blocking

- Synchronization: Use `sync.WaitGroup` (`Add`, `Wait`, `Done`) to make the Main Go Routine wait for all workers to complete. Use `defer` with `Done()` for reliability

- Communication: Use Channels to pass data between Go Routines. Close a channel from the sending side once no more values will be sent, so that range loops over it terminate instead of deadlocking

- Fan Out/Fan In: Ideal for smaller, fixed sets of parallelizable tasks where you spin up one worker per task

- Worker Pool: Essential for scalability, where you limit the number of workers but feed them an arbitrarily large stream of jobs via a dedicated Job Channel

When to Use Each Pattern

Use Fan Out/Fan In when:

- You have a small, known number of tasks

- Each task is independent

- You want maximum parallelism

- Resource usage is not a concern

Use Worker Pool when:

- You have many tasks (hundreds or thousands)

- You need to control resource usage

- Tasks arrive continuously

- You want to prevent system overload

Next Steps

Now that you understand these patterns, try implementing them in your own projects:

- Start with a simple Fan Out/Fan In for parallel API calls

- Build a Worker Pool for batch processing tasks

- Combine patterns for complex workflows

- Monitor performance and adjust worker counts

Go's concurrency model is powerful but requires practice. Start small, test thoroughly, and gradually build more complex concurrent systems. Happy coding! 🚀